LLaDA

Combines Aspects of Large Language Models and Diffusion Models

What Is LLaDA:

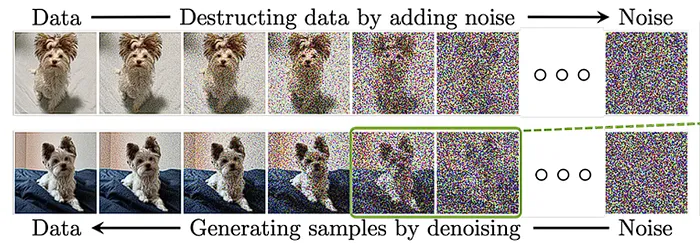

LLaDA is a new large language model based on the diffusion model. It draws on the idea of diffusion models in the field of image processing and applies this technology to the field of natural language processing. By simulating the generation process of text data, LLaDA can generate complete and coherent text content for part of the information, thereby realizing the generation and understanding of text data.

How Does LLaDA Work:

LLaDA works based on a diffusion model, gradually adding noise to the data through a forward process, and then learning how to recover the original data from the noise through a reverse process. Specifically, the workflow of LLaDA is as follows:

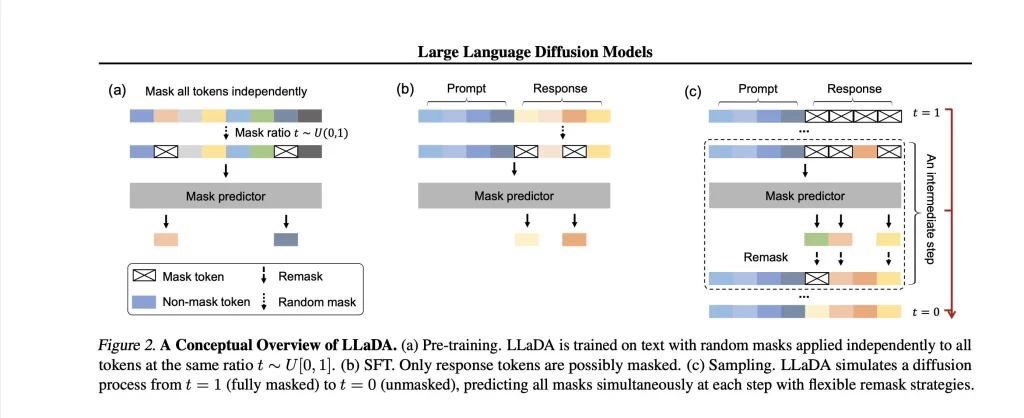

Pre-training stage: Randomly mask some tokens of the input text, and train the model to predict the masked positions.

Supervised Fine-tuning (SFT) stage: Only mask the response part that needs to be generated, guiding the model to produce content that meets specific instructions.

Generation stage: Gradually unmask through diffusion sampling, predicting all masked tokens simultaneously in each iteration, eventually generating complete text. This approach allows the model to dynamically adjust logic during the generation process, similar to the human thinking process that progresses from vague to clear.

Differences Between LLaDA and Traditional LLMs:

| Comparison Dimension | Traditional LLM | LLaDA |

|---|---|---|

| Generation Mechanism | Autoregressive Token Generation (Unidirectional Dependency) | Parallel Diffusion Generation (Global Optimization, Bidirectional Adjustment) |

| Refinement Capability | Error Accumulation Effect Is Significant | Diffusion Process Allows Correction of Logical Contradictions Midway |

| Long Text Generation | Limited by Attention Window | Global Consistency Optimization |

| Inference Speed | Token Serial Computation, Slower Speed | Parallel Generation, 2-5 Times Faster |

| Training Objective | Probability of Predicting the Next Word | Learning the Mapping Relationship from Noise to Text |

What Is LLaDA Application:

Text Generation: can be used to generate various types of text, such as articles, stories, code.

Instruction Following: LLaDA trained with SFT can follow instructions well and can be used to build dialogue systems, intelligent assistants.

Context Learning: LLaDA performs exceptionally well in context learning, and can generate relevant text based on the given context without explicit training.

Code Generation: can generate code based on user requirements.

Translation: LLaDA can perform multi-lingual translation.

How can I access or use LLaDA?

Download source code: Clone or download the LLaDA code repository from the GitHub page.

Install dependencies: Install the required environment and dependencies according to the instructions in the documentation.

Fine-tune and apply: Fine-tune and test the model according to your data and task requirements.

How Does LLaDA Differ From Traditional Language Models Like GPT or BERT:

| Comparison Dimension | LLaDA | GPT | BERT |

|---|---|---|---|

| Underlying Mechanism | Diffusion-based generation | Autoregressive generation | Masked language modeling |

| Text Generation Process | Parallel, iterative denoising | Sequential, token-by-token | Not primarily designed for generation |

| Directional Context | Bidirectional with global optimization | Unidirectional (left-to-right) | Bidirectional attention |

| Inference Speed | 2-5× faster than autoregressive models | Limited by sequential decoding | Fast for encoding, not for generation |

| Error Correction | Can correct logical inconsistencies during diffusion | Errors cascade to subsequent tokens | Simultaneous prediction of masked tokens |

| Long-form Content | Better handles long-distance dependencies | Can drift or lose coherence | Limited by fixed context length |

| Training Objective | Minimize noise in diffusion process | Maximize next token probability | Predict masked tokens from context |

| Generation Flexibility | Can adjust content bidirectionally | Only forward adjustment possible | Fill-in-the-blank capability |

How Does LLaDA Handle Discrete Text Data:

Continuous embedding space: Map discrete Tokens to high-dimensional continuous vectors (e.g., 512 dimensions), and perform diffusion operations in the vector space.

Discrete noise scheduling: Design Token-level noise perturbation algorithms to ensure gradual semantic degradation (such as: random replacement or deletion of Tokens).

Masked denoising optimization: Use neural networks to learn the conditional probability of recovering the original Token distribution from noisy vectors, and generate text by gradually unmasking.

What are the main limitations of LLaDA:

High demand for computing resources: Due to its complex model architecture and diffusion process, LLaDA requires a large amount of computing resources for training and inference. This may limit its application in resource-constrained environments.

Long training time: The training process of diffusion models is generally more complex and takes longer than traditional autoregressive models. This may affect the iteration speed and update frequency of the model.

Slower generation speed: Due to the iterative nature of the denoising process, LLaDA may be slower in generating text than other models (such as GPT) during real-time applications.

Sensitivity to noise: Although diffusion models have advantages in handling noisy data, LLaDA may still be sensitive to input data noise, requiring fine-tuning and preprocessing.

Frequently Asked Questions:

Is LLaDA an open-source model:

LLaDA is the first open-source diffusion large language model, providing a code library for users to conduct research and modifications. However, it should be noted that LLaDA does not provide a complete training framework and dataset like many open-source large language models. Currently, only the basic implementation of pre-trained models and fine-tuning is provided, and users need to modify and adapt the code according to their own needs.

Is there an API available for LLaDA:

There is currently no official API. LLaDA mainly provides access through open source code and online demos, and users need to deploy the model or integrate it into their local system themselves.

What Training Datasets Are Used for LLaDA:

Common public datasets include Wikipedia, Common Crawl, OpenWebText, and others. These datasets contain a large amount of text data that can be used to train large language models. To improve the model’s performance in specific domains, LLaDA may use some domain-specific datasets, such as medical literature, legal documents, and scientific papers. Additionally, to support multilingual tasks, LLaDA will also use multilingual datasets for training.